Today, heavy and monotonous work already seems impossible to carry out without robots. In everyday life, however, robots are still little used, and they have not yet gained access to our private lives. That is likely to change in the near future.

Category Archives: Artificial Intelligence

Ninomiya Kun Robot reads aloud books

Japanese scientists have developed a robot that can read paper books. Ninomiya Kun was developed at Waseda University’s Production and Systems Research Center (IPSRC) and was presented on June 11, 2009 at a Japanese robot fair in Kitakyushu, where it entertained visitors by reading fairy tales aloud.

Computers in brains, or brains in computers?

A year ago, IBM received funding to develop software that can simulate the human brain. This is a natural step in the evolution of such studies: scientists have already tried to simulate the brains of rats and other animals. The main problem was not even the lack of complete clarity in our understanding of the brain of, for example, laboratory mice.

Robot HRP-4C sang and danced

The humanoid robot HRP-4C took part in various events this year in various roles, often as a fashion model. This is understandable, because the robot’s shape, gait and gestures resemble those of a human. The robot, developed by Japan’s National Institute of Advanced Industrial Science and Technology (AIST), can recognize speech, speak, and has well-developed facial expressions.

Robots already kissed!

Thomas and Janet are the first two robots to dare to kiss. The kiss took place in December last year during a performance of scenes from the thriller “The Phantom of the Opera” at the National Taiwan University of Science and Technology.

Avicenna was a robot, and spoke in Arabic

The world’s first Arabic-speaking robot has appeared in the Laboratory of Information Technologies at the University of Al-Ain (United Arab Emirates). For more than a year, a group of researchers, including university students, worked on creating this one-of-a-kind machine. The robot was given an appearance in the spirit of the wise men of the ancient East.

Intelligent Autonomous Robots are the new generation

At least six fields of research structure advanced robotics today: situated robotics (which relates the robot to its environment), behavior-based robotics, cognitive robotics, developmental or epigenetic robotics, evolutionary robotics and biorobotics. It is a broad field of interdisciplinary study that draws on engineering (mechanical, electrical, electronic and computer) and on science (physics, anatomy, psychology, biology, zoology and ethology, among others). The basis of this research is Embodied Cognitive Science and the New AI. Its purpose: to bring to life intelligent, autonomous robots that reason, behave, evolve and act like people. By Sergio Moriello.

Robotics here refers to highly complex automated systems that have an articulated mechanical structure, governed by an electronic control system, and characteristics of autonomy, reliability, versatility and mobility.

In essence, “autonomous intelligent robots” are dynamic systems consisting of an electronic controller coupled to a mechanical body. These machines therefore require adequate sensory systems (to perceive the environment in which they operate), a precise and adaptable mechanical structure (to provide certain physical skills of locomotion and manipulation), complex effector systems (to carry out the assigned tasks) and sophisticated control systems (to take corrective action when necessary) [Moriello, 2005, p. 172].
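The coupling of sensing, control and actuation described above can be illustrated with a very small closed loop. This is only a sketch under stated assumptions: the one-dimensional "position", the ideal noise-free sensor and actuator, the proportional rule and the names `proportional_step` and `run_loop` are all hypothetical and do not come from the article.

```python
def proportional_step(target, measured, gain=0.5):
    """Control: return a corrective command proportional to the current error."""
    return gain * (target - measured)


def run_loop(target, start, steps=20, gain=0.5):
    """Simulate repeated sense -> control -> act cycles for a 1-D position."""
    position = start
    history = [position]
    for _ in range(steps):
        measured = position                                   # sense (ideal sensor, an assumption)
        command = proportional_step(target, measured, gain)   # control (corrective action)
        position += command                                   # act (ideal actuator, an assumption)
        history.append(position)
    return history


if __name__ == "__main__":
    trajectory = run_loop(target=10.0, start=0.0)
    print(trajectory[:4], "...", trajectory[-1])
```

With a gain between 0 and 1 the remaining error shrinks by a constant factor on every cycle, which is the simplest form of the "corrective action" the paragraph mentions.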

Situated Robotics

This approach deals with robots that are embedded in complex and often dynamically changing environments [Mataric, 2002]. It is based on two central ideas [Florian, 2003] [Muñoz Moreno, 2000] [Innocenti Badano, 2000]: a) robots “are embodied” (embodiment), i.e., they have a physical body suited to experiencing their environment directly, where their actions have immediate feedback on their own perceptions; and b) they “are situated” (situatedness), i.e., they are embedded in an environment and interact with the world, which directly influences their behavior.

The complexity of the environment is closely related to the complexity of the control system. If the robot has to react quickly and intelligently in a dynamic and challenging environment, the control problem becomes very difficult. If, however, the robot does not need to respond quickly, the complexity required of the control system is reduced.

Within this paradigm there are several subparadigms: “behavior-based robotics”, “cognitive robotics”, “epigenetic robotics”, “evolutionary robotics” and “biomimetic robotics”.

Behavior-Based Robotics

This approach uses behaviorist principles: robots generate a behavior only when stimulated, i.e., they respond to changes in their local environment (as when someone accidentally touches a hot object). Here, the designer divides the task into many different basic behaviors, each of which runs in a separate layer of the robot’s control system.

Typically, these modules (behaviors) might be obstacle avoidance, walking, lifting, etc. The intelligent features of the system, such as perception, planning, modeling and learning, emerge from the interaction between the various modules and the physical environment in which the robot is immersed. The control system, which is fully distributed, is built incrementally, layer by layer, through a process of trial and error, and each layer is responsible for only one basic behavior [Moriello, 2005, pp. 177-178].

Behavior-based systems are capable of reacting in real time, since they compute actions directly from perceptions (through a set of “situation-action” correspondence rules). It is important to note that the number of layers grows with the complexity of the problem. Thus, a very complex task may be beyond the ability of the designer (it is hard to define all the layers, their interrelationships and dependencies) [Pratiharas, 2003].
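As a rough illustration of layered “situation-action” rules, the sketch below arbitrates between three behaviors with a fixed priority order. It is a deliberate simplification, not the architecture the cited authors describe: the behavior names, the percept keys (`bumper_pressed`, `light_ahead`) and the action strings are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Percepts = Dict[str, bool]
Behavior = Callable[[Percepts], Optional[str]]


def avoid_obstacle(percepts: Percepts) -> Optional[str]:
    """Highest-priority layer: react to a collision."""
    return "turn_left" if percepts.get("bumper_pressed") else None


def seek_light(percepts: Percepts) -> Optional[str]:
    """Middle layer: head towards a light source when one is seen."""
    return "forward" if percepts.get("light_ahead") else None


def wander(percepts: Percepts) -> Optional[str]:
    """Lowest layer: always proposes something to do."""
    return "wander"


# Layers listed from highest to lowest priority (an assumed ordering).
LAYERS: List[Tuple[str, Behavior]] = [
    ("avoid", avoid_obstacle),
    ("seek", seek_light),
    ("wander", wander),
]


def select_action(percepts: Percepts,
                  layers: List[Tuple[str, Behavior]] = LAYERS) -> Tuple[str, str]:
    """Return (layer name, action) from the first layer whose rule fires."""
    for name, behavior in layers:
        action = behavior(percepts)
        if action is not None:
            return name, action
    return "none", "stop"


if __name__ == "__main__":
    print(select_action({"bumper_pressed": True, "light_ahead": True}))
    print(select_action({"light_ahead": True}))
    print(select_action({}))
```

Each behavior maps the current percepts straight to an action without any world model, which is why such systems can react quickly. Every new capability adds a layer and its interactions with the others, which is the design burden noted above.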

Another drawback is that, because several individual behaviors are present and interact dynamically with the world, it is often difficult to say that a particular series of actions was the product of a particular behavior. Sometimes several behaviors are active simultaneously or alternate rapidly.

Although systems built with this approach may reach insect-level intelligence, they will probably have limited skills, because they have no internal representations [Dawson, 2002]. Indeed, robots of this type have great difficulty executing complex tasks, and even in the simplest ones there is no guarantee that the solution found is optimal.

Cognitive Robotics

This approach uses techniques from the field of Cognitive Science. It deals with implementing robots that perceive, reason and act in dynamic, unknown and unpredictable environments. Such robots must have cognitive functions that involve high-level reasoning about, for example, goals, actions, time, the cognitive states of other robots, when and what to perceive, learning from experience, and so on.

To that end, they must possess an internal symbolic model of their local environment, and sufficient capacity for logical reasoning to make decisions and perform the tasks necessary to achieve their objectives. In short, this line of work is concerned with implementing cognitive characteristics in robots, such as perception, concept formation, attention, learning, short- and long-term memory, etc. [Bogner, Maletic, Franklin, 2000].

If robots can be made to develop their cognitive abilities by themselves, there is no need to program them “by hand” for every conceivable task or contingency [Kovacs, 2004]. Also, if robots can be made to use representations and reasoning mechanisms similar to those of humans, human-computer interaction and collaborative work could improve. However, this requires high processing power (especially if the robot has many sensors and actuators) and a lot of memory (to represent the state space).
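To see why memory becomes an issue, consider a robot whose state is described by a set of discretized sensor readings. The number of distinct states is the product of the number of levels of each sensor, so it grows exponentially with the number of sensors. The helper below is a hypothetical illustration of that arithmetic, and the sensor counts in the usage lines are made-up figures.

```python
def state_space_size(levels_per_sensor):
    """Number of distinct states when each sensor takes a fixed number of discrete levels."""
    size = 1
    for levels in levels_per_sensor:
        if levels < 1:
            raise ValueError("each sensor needs at least one level")
        size *= levels
    return size


if __name__ == "__main__":
    # Assumed example: 8 sensors with 4 levels each, then 16 such sensors.
    print(state_space_size([4] * 8))
    print(state_space_size([4] * 16))
```

Doubling the number of sensors squares the number of states in this example, which is why a table-like representation of the state space quickly becomes impractical.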

Epigenetic and Developmental Robotics

This approach is characterized by its attempt to implement general-purpose control systems through a long process of autonomous development or self-organization. As a result of interaction with its environment, the robot is able to develop different, and increasingly complex, perceptual, cognitive and behavioral skills.

This research area integrates developmental neuroscience, developmental psychology and situated robotics. Initially the system can be equipped with a small set of innate behaviors or knowledge but, thanks to experience, it is able to create more complex representations and actions. In short, the idea is for the machine to develop independently the skills appropriate for a particular environment, passing through the different stages of its “autonomous mental development”.

The difference between developmental robotics and epigenetic robotics, sometimes grouped under the term “ontogenetic robotics”, is subtle and concerns the type of environment: while the former refers only to the physical environment, the latter also takes the social environment into account.

The term epigenetic (beyond the genetic) was introduced in psychology by the Swiss psychologist Jean Piaget to describe his new field of study, which emphasizes the individual’s sensorimotor interaction with the physical environment rather than taking only genes into account. The Russian psychologist Lev Vygotsky, in turn, complemented this idea with the importance of social interaction.

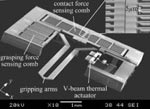

Nanobots gifted with a sense of touch

Robots at the nanoscale can already do some basic manipulation. But they have remained difficult to control well enough to perform more complex actions, such as handling nanoelectronic components or cells. To overcome this problem, a team at the University of Toronto (Canada) has announced a pair of robotic grippers that can move independently in a microscopic environment without damaging the surrounding components. The principle is simple: these micro-robots are endowed with a sense of touch. “The robots are equipped with load cells that allow them to perceive their environment through touch,” Philippe Bidaud, director of the Institute for Intelligent Systems and Robotics (ISIR), told L’Atelier. “And therefore to take in data such as the weight of an object or the resistance of a membrane,” he says.

Reusing common robotic processes

According to Sun Yu, the project manager, these would be the first grippers that can really feel the pressure with which they are grasping an object and take it into account in their operations. “We apply concepts already used in traditional robotics, but at the nanoscale,” the researcher said. “Earlier experiments did not provide such feedback. The grippers broke the things they picked up, or broke themselves,” he says. Also new: they can sense the proximity of an object and either pick it up or avoid it to prevent any damage. These functions, if the grippers are connected to a computer program containing the operations to follow, let them act without human intervention. In tests on animal cells, these robotic nano-arms carried out the manipulation with a damage rate of only 15%. “Giving these nanobots a sense of touch makes it possible to achieve at the nanoscale the kind of manipulation we can do today at the human scale,” adds Philippe Bidaud.
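The idea of a gripper that takes the measured pressure into account can be sketched as a loop that closes in small steps and stops as soon as the sensed force reaches a limit. This is not the Toronto team’s controller: the function names, the simulated linear force sensor, the arbitrary force units and the numeric values are all assumptions made for illustration.

```python
def simulated_force(step, contact_step=10, stiffness=0.5):
    """Hypothetical sensor: no force before contact, then force rising linearly."""
    return max(0, step - contact_step) * stiffness


def close_until_force(read_force, force_limit, max_steps=100):
    """Close the gripper one step at a time and stop once the force limit is reached.

    Returns the step at which closing stopped, or None if the limit was never reached.
    """
    for step in range(max_steps):
        if read_force(step) >= force_limit:
            return step          # stop here instead of crushing the object
    return None


if __name__ == "__main__":
    stop_step = close_until_force(simulated_force, force_limit=2.0)
    print("gripper stopped at step", stop_step)
```

Without the force reading, the loop would have no reason to stop before the mechanical end of travel, which matches the researcher’s remark that earlier grippers broke what they picked up.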

Building sensors at low cost

The applications are numerous, including at the industrial level. Such systems could in fact assemble microelectronic devices and sensors designed for integration into health or high-tech appliances (PCs, mobile phones). This can be achieved with conventional manufacturing techniques. Above all, the grippers should not be expensive: they are made from conventional silicon wafers. If they were mass produced, they could well be offered at 10 dollars for fifty pairs. Another use, and not the least: they could help rebuild body tissues. Finally, Philippe Bidaud points out, they could play a significant role in therapy. “These systems will let us know certain properties of cells that are currently inaccessible to us,” he concludes. From a technical perspective, each “arm” is about three millimeters long. They can grasp cells and components only ten micrometers wide, in less than a second.

Ryobot by Patrick Brennan

RYOBOT is my Rug-Warrior-based robot. It stands about 8 inches high, and is about 6 inches in diameter. It has two-motor differential drive, a 360 degree bump skirt, and the full complement of sensors from the Rug Warrior design. The logic is powered by 4 AA cells and the drive is powered by six rechargeable 2-volt D cells in two batteries of three each.

The mechanical design and fabrication was largely dictated by the resources available to me at the time I began this project. The main structure is made of three flat, circular levels, supported by steel threaded rod beams. The levels are made out of sheet styrene, which was chosen because it is easy to cut and relatively strong.

The cylindrical bump skirt is constructed out of aluminum sheet stock, and is supported from the second level of RYOBOT by 18-gauge wire. When complete, RYOBOT’s external appearance should be essentially that of a festive little trash can on wheels.

The wheels of RYOBOT are connected directly to the output shafts of my gearmotors, since I do not expect RYOBOT to carry much weight. The gearmotors are surplus which I got through Edmund Scientific. They are bolted to an aluminum bracket which is in turn bolted to the first level of the robot. The output shaft is connected via a shaft coupler (not shown in the drawings) to a MECCANO shaft which mates with the MECCANO wheel. The shaft couplers were far and away the most difficult part to source for this design, and finding them delayed the construction for a long time. The drive assembly on the first level is the bulk of RYOBOT’s weight.
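For readers unfamiliar with two-motor differential drive, the standard kinematic relation between the two wheel speeds and the motion of the robot body can be sketched as follows. This is not code from RYOBOT: the function name and the track width used in the example (the distance between the two wheels, taken here as 0.15 m) are assumptions.

```python
def body_velocity(v_left, v_right, track_width):
    """Differential-drive kinematics.

    v_left, v_right: ground speed of each wheel (m/s)
    track_width: distance between the two wheels (m)
    Returns (linear velocity in m/s, angular velocity in rad/s, positive = counter-clockwise).
    """
    if track_width <= 0:
        raise ValueError("track_width must be positive")
    linear = (v_right + v_left) / 2.0
    angular = (v_right - v_left) / track_width
    return linear, angular


if __name__ == "__main__":
    print(body_velocity(0.2, 0.2, 0.15))    # equal speeds: straight line
    print(body_velocity(-0.1, 0.1, 0.15))   # opposite speeds: turn in place
```

Equal wheel speeds give straight-line motion, while equal and opposite speeds make the robot spin about its own center, which suits a round robot with a 360 degree bump skirt.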

The Rug Warrior board is the brain of RYOBOT. It is a commercially available circuit board, available from various vendors in various forms:

- (a) as a bare PC board and parts list with assembly directions,

- (b) in kit form with all parts, or

- (c) fully assembled.

Robby by Oualid Burström

A robot arm commanded by a microcomputer. The processor is a single-chip processor from Intel (80C196KB) running at 12 MHz, with 32K RAM and 16K ROM. I use an IBM PC compatible computer to send command sequences via a serial port (COM1 or COM2) to the 80C196KB. The 80C196KB transforms the command sequences into pulses that make the robot move. The PC program is a simulator: you can run a simulation and see how the robot will move. I programmed both the 80C196KB and the PC in C. I used plywood and aluminium to build the robot. The robot is driven by four R/C servos (HITEC HS-300).

Specifications:

- A robot arm commanded by a microcomputer.

- The microcomputer: 16 KB ROM, 32 KB RAM, 12 MHz.

- The microprocessor in the microcomputer is a single-chip processor made by Intel, the 80C196KB.

- A program on an IBM PC compatible simulates the robot arm’s movement.

- The command sequences are then sent to the microcomputer via a COM port.

- The microcomputer translates the commands and makes the robot move.

- The robot is a four-axis robot with four R/C servos (HITEC HS-300): one for the base, one for the shoulder, one for the elbow and one for the gripper.

- The materials used to build the robot are plywood and aluminium.

Hardware required for the PC program:

- A PC based on an Intel 80386 or higher.

- SVGA graphics for the GUI.

- MS-DOS as the operating system.

- A communication port (COM1 or COM2) for sending data to and receiving data from the robot.
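The step in which commands are turned into servo pulses can be illustrated with a small sketch. Hobby R/C servos are commonly positioned by the width of a repeated pulse, typically somewhere around 1 to 2 ms. The exact limits below (1000 to 2000 microseconds over 180 degrees), the joint names as dictionary keys and both function names are assumptions for illustration, not values from the HS-300 or from Robby’s actual C firmware.

```python
JOINTS = ("base", "shoulder", "elbow", "gripper")


def angle_to_pulse_us(angle_deg, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """Map a joint angle linearly onto a servo pulse width in microseconds."""
    if not 0.0 <= angle_deg <= max_angle:
        raise ValueError("angle out of range")
    return min_us + (max_us - min_us) * angle_deg / max_angle


def command_to_pulses(command):
    """Translate one command (joint name -> angle in degrees) into pulse widths."""
    return {joint: angle_to_pulse_us(command[joint]) for joint in JOINTS}


if __name__ == "__main__":
    pose = {"base": 90.0, "shoulder": 45.0, "elbow": 135.0, "gripper": 0.0}
    print(command_to_pulses(pose))
```

On real hardware the microcontroller would have to generate these pulse widths with a timer for each of the four servos. The mapping itself stays the same, and the limits should be taken from the servo’s documentation rather than from this sketch.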